Hive installation on Windows 10

Hive Introduction

In my previous posts, I covered an overview of Hadoop and HBase, as well as their installation on Windows. We saw that Hadoop performs only batch processing and accesses data only sequentially, which means that even the simplest job has to scan the entire data set.

In that scenario, processing a huge data set produces another huge data set, which must also be processed sequentially. What is needed instead is a way to reach any point in the data in a single unit of time (random access). This is where HBase comes in: it can store massive amounts of data, from terabytes to petabytes, and it allows fast random reads and writes that Hadoop alone cannot provide.

HBase is an open-source, non-relational (NoSQL), distributed, column-oriented database that runs on top of HDFS and provides real-time read/write access to large data sets. It is modeled on Google's Bigtable, is written primarily in Java, and is designed to provide quick random access to huge data sets.

Next in this series, we will walk through Apache Hive. Hive is a data warehouse infrastructure built on top of the Hadoop Distributed File System (HDFS) and MapReduce that summarizes Big Data and makes querying and analysis easy. It is also widely used as an ETL tool in the Hadoop ecosystem, and it lets developers write Hive Query Language (HQL) statements that are very similar to SQL statements.

Hive Installation

In brief, Hive is a data warehouse software project built on top of Hadoop that facilitates reading, writing, and managing large data sets residing in distributed storage using SQL. Before moving ahead, you must install Hadoop first. I assume Hadoop is already installed; if it is not, see my previous post on how to install Hadoop on Windows.

I installed Hive (2.1.0) with Apache Derby (10.12.1.1) as the metastore database, though you can use any stable version.

Download Hive 2.1.0

- https://archive.apache.org/dist/hive/hive-2.1.0/

Download Apache Derby 10.12.1.1

- https://archive.apache.org/dist/db/derby/db-derby-10.12.1.1/

Download hive-site.xml

- https://mindtreeonline-my.sharepoint.com/:u:/g/personal/m1045767_mindtree_com1/EbsE-U5qCIhIpo8AmwuOzwUBstJ9odc6_QA733OId5qWOg?e=2X9cfX

- https://drive.google.com/file/d/1qqAo7RQfr5Q6O-GTom6Rji3TdufP81zd/view?usp=sharing

STEP - 1: Extract the Hive file

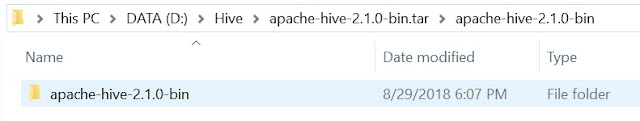

Extract apache-hive-2.1.0-bin.tar.gz and place it under "D:\Hive" (you can use any preferred location):

[1] The first extraction gives you another .tar file.

[2] Go inside the apache-hive-2.1.0-bin.tar folder and extract it again.

[3] Copy the leaf folder “apache-hive-2.1.0-bin”, move it to the root folder "D:\Hive", and remove all other files and folders.
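The double extraction in [1] and [2] happens because tools such as 7-Zip unpack the gzip layer and the tar layer separately. As a minimal sketch, assuming Python 3 is installed (Hive itself does not need it), the standard tarfile module can handle both layers in one pass. The function name extract_tarball and the example paths are illustrative only.

```python
import tarfile
from pathlib import Path


def extract_tarball(archive: str, dest: str) -> Path:
    """Extract a .tar.gz archive into dest in a single pass.

    Returns the path of the archive's first top-level folder,
    e.g. the apache-hive-2.1.0-bin folder for the Hive tarball.
    """
    dest_dir = Path(dest)
    dest_dir.mkdir(parents=True, exist_ok=True)
    # "r:gz" decompresses the gzip layer and reads the tar layer in one go,
    # so no intermediate .tar file is written to disk.
    with tarfile.open(archive, "r:gz") as tar:
        top_level = sorted(
            {Path(m.name).parts[0] for m in tar.getmembers() if Path(m.name).parts}
        )
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions support extraction filters; "data" rejects unsafe paths.
            tar.extractall(dest_dir, filter="data")
        else:
            tar.extractall(dest_dir)
    if not top_level:
        raise ValueError(f"{archive} is empty")
    return dest_dir / top_level[0]
```

For example, extract_tarball(r"C:\Downloads\apache-hive-2.1.0-bin.tar.gz", r"D:\Hive") should leave the apache-hive-2.1.0-bin folder directly under D:\Hive, assuming the archive was saved to that (hypothetical) download folder. The same call with the Derby archive and D:\Derby covers Step 2.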

STEP - 2: Extract the Derby file

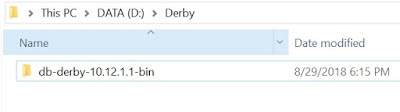

As with Hive, extract db-derby-10.12.1.1-bin.tar.gz and place it under "D:\Derby" (you can use any preferred location).

STEP - 3: Moving hive-site.xml file

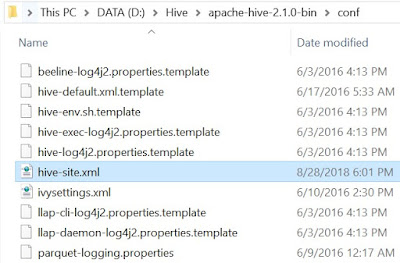

Drop the downloaded “hive-site.xml” file into the Hive configuration folder “D:\Hive\apache-hive-2.1.0-bin\conf”.

STEP - 4: Moving Derby libraries

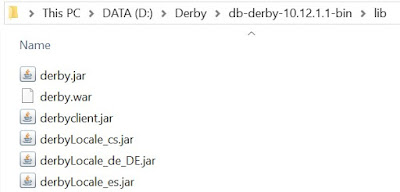

Next, copy all the Derby libraries into the Hive library folder:

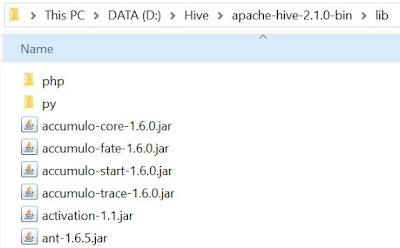

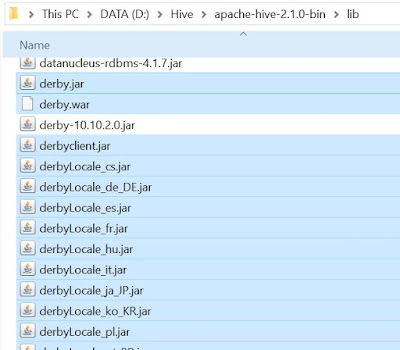

[1] Go to the Derby library folder D:\Derby\db-derby-10.12.1.1-bin\lib.

[2] Select and copy all the libraries.

[3] Go to the Hive library folder D:\Hive\apache-hive-2.1.0-bin\lib.

[4] Paste all the copied libraries here.
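Steps [1] to [4] amount to copying every file from one lib folder into another. Here is a minimal Python 3 sketch of the same copy; copy_libraries is a hypothetical helper name, not part of Hive or Derby.

```python
import shutil
from pathlib import Path
from typing import List


def copy_libraries(src_lib: str, dest_lib: str) -> List[str]:
    """Copy every file in src_lib into dest_lib and return the copied file names."""
    dest = Path(dest_lib)
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    for item in sorted(Path(src_lib).iterdir()):
        if item.is_file():  # sub-folders, if any, are skipped
            # copy2 also keeps timestamps; a file with the same name in dest is overwritten.
            shutil.copy2(item, dest / item.name)
            copied.append(item.name)
    return copied
```

Calling copy_libraries(r"D:\Derby\db-derby-10.12.1.1-bin\lib", r"D:\Hive\apache-hive-2.1.0-bin\lib") returns the names of the copied files, which makes it easy to confirm that jars such as derbyclient.jar (Derby's network client driver) arrived.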

STEP - 5: Configure Environment variables

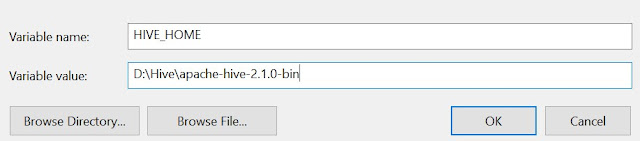

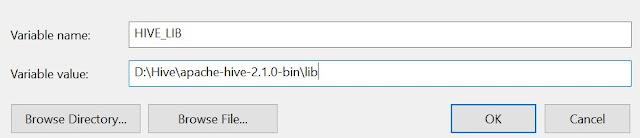

Set the following environment variables (User variables) on Windows 10:

- HIVE_HOME - D:\Hive\apache-hive-2.1.0-bin

- HIVE_BIN - D:\Hive\apache-hive-2.1.0-bin\bin

- HIVE_LIB - D:\Hive\apache-hive-2.1.0-bin\lib

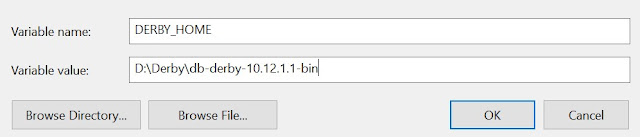

- DERBY_HOME - D:\Derby\db-derby-10.12.1.1-bin

- HADOOP_USER_CLASSPATH_FIRST - true

To open the Environment Variables dialog: This PC -> Right-click -> Properties -> Advanced system settings -> Advanced -> Environment Variables.

STEP - 6: Configure System variables

Next, set the System variables, including the Hive and Derby bin directories in Path:

Variable: HADOOP_USER_CLASSPATH_FIRST

Value: true

Variable: Path

Value (append both entries to the existing Path):

- D:\Hive\apache-hive-2.1.0-bin\bin

- D:\Derby\db-derby-10.12.1.1-bin\bin
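New or changed variables are only picked up by command prompts opened afterwards, so a quick check from a fresh prompt can save debugging later. The sketch below assumes Python 3 and the exact D:\ locations used in Steps 5 and 6, and reports missing or mismatched values. check_environment is a hypothetical helper, and the expected values should be edited if you installed elsewhere.

```python
import os
from typing import List, Mapping, Optional

# Expected values copied from Steps 5 and 6; adjust if your install paths differ.
EXPECTED_VARS = {
    "HIVE_HOME": r"D:\Hive\apache-hive-2.1.0-bin",
    "HIVE_BIN": r"D:\Hive\apache-hive-2.1.0-bin\bin",
    "HIVE_LIB": r"D:\Hive\apache-hive-2.1.0-bin\lib",
    "DERBY_HOME": r"D:\Derby\db-derby-10.12.1.1-bin",
    "HADOOP_USER_CLASSPATH_FIRST": "true",
}
EXPECTED_PATH_ENTRIES = [
    r"D:\Hive\apache-hive-2.1.0-bin\bin",
    r"D:\Derby\db-derby-10.12.1.1-bin\bin",
]


def _normalize(value: str) -> str:
    # Windows paths are case-insensitive by default; also ignore a trailing backslash.
    return value.strip().rstrip("\\").lower()


def check_environment(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of problems; an empty list means the variables look right."""
    env = os.environ if env is None else env
    problems = []
    for name, expected in EXPECTED_VARS.items():
        actual = env.get(name)
        if actual is None:
            problems.append(f"{name} is not set")
        elif _normalize(actual) != _normalize(expected):
            problems.append(f"{name} is {actual!r}, expected {expected!r}")
    # Path entries are separated by ';' on Windows.
    raw_path = env.get("Path") or env.get("PATH") or ""
    entries = {_normalize(p) for p in raw_path.split(";") if p.strip()}
    for entry in EXPECTED_PATH_ENTRIES:
        if _normalize(entry) not in entries:
            problems.append(f"Path is missing {entry}")
    return problems
```

Running print(check_environment()) in a newly opened command prompt should print an empty list once everything is set.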

STEP - 7: Working with hive-site.xml

Now cross-check the Hive configuration file for the Derby connection details:

- hive-site.xml

[1] Edit D:\Hive\apache-hive-2.1.0-bin\conf\hive-site.xml, paste in the XML below, and save the file.

    <configuration>
      <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:derby://localhost:1527/metastore_db;create=true</value>
        <description>JDBC connect string for a JDBC metastore</description>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>org.apache.derby.jdbc.ClientDriver</value>
        <description>Driver class name for a JDBC metastore</description>
      </property>
      <property>
        <name>hive.server2.enable.impersonation</name>
        <description>Enable user impersonation for HiveServer2</description>
        <value>true</value>
      </property>
      <property>
        <name>hive.server2.authentication</name>
        <value>NONE</value>
        <description> Client authentication types. NONE: no authentication check LDAP: LDAP/AD based authentication KERBEROS: Kerberos/GSSAPI authentication CUSTOM: Custom authentication provider (Use with property hive.server2.custom.authentication.class) </description>
      </property>
      <property>
        <name>datanucleus.autoCreateTables</name>
        <value>True</value>
      </property>
    </configuration>
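The two settings that tie Hive to Derby are the ConnectionURL and the ConnectionDriverName: org.apache.derby.jdbc.ClientDriver is Derby's network client driver, which is why Derby is later started as a network server rather than used in embedded mode. As a sketch (assuming Python 3; both helper names are hypothetical), the standard xml.etree.ElementTree module can read those properties back and pull out the host, port, and database name.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Tuple

DERBY_DEFAULT_PORT = 1527  # default listening port of the Derby Network Server


def read_properties(path: str) -> Dict[str, str]:
    """Map each <property>'s <name> to its <value> in a Hadoop-style *-site.xml file."""
    root = ET.parse(path).getroot()
    props = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            props[name] = (prop.findtext("value") or "").strip()
    return props


def derby_endpoint(props: Dict[str, str]) -> Tuple[str, int, str]:
    """Split a jdbc:derby://host:port/database;attr=... URL into (host, port, database)."""
    url = props["javax.jdo.option.ConnectionURL"]
    prefix = "jdbc:derby://"
    if not url.startswith(prefix):
        raise ValueError(f"not a Derby network-server URL: {url}")
    # Drop attributes such as ';create=true', then split 'host:port/database'.
    location = url[len(prefix):].split(";", 1)[0]
    host_port, _, database = location.partition("/")
    host, _, port = host_port.partition(":")
    port_number = int(port) if port else DERBY_DEFAULT_PORT
    return host, port_number, database
```

For the configuration above, derby_endpoint(read_properties(r"D:\Hive\apache-hive-2.1.0-bin\conf\hive-site.xml")) gives ("localhost", 1527, "metastore_db").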

STEP - 8: Start Hadoop

First, start Hadoop:

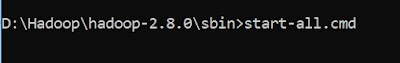

Open a command prompt, change directory to “D:\Hadoop\hadoop-2.8.0\sbin”, and type “start-all.cmd” to start Hadoop.

It opens four command prompt windows, one for each of the following daemons:

- Hadoop DataNode

- Hadoop NameNode

- YARN NodeManager

- YARN ResourceManager

You can also verify them in a browser:

- Namenode (hdfs) - http://localhost:50070

- Datanode - http://localhost:50075

- All Applications (cluster) - http://localhost:8088 etc.

Since ‘start-all.cmd’ is deprecated, you can instead run the following commands, in this order:

- “start-dfs.cmd” and

- “start-yarn.cmd”

STEP - 9: Start Derby server

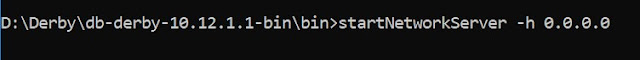

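Once Hadoop is running, change directory to “D:\Derby\db-derby-10.12.1.1-bin\bin” and type “startNetworkServer -h 0.0.0.0” to start the Derby Network Server. The -h 0.0.0.0 option makes it listen on all network interfaces, and it uses port 1527 by default, which matches the ConnectionURL in hive-site.xml.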

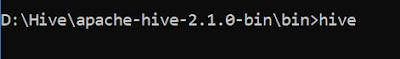
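With Hadoop (Step 8) and Derby (Step 9) running, each service should be accepting TCP connections on its port. This sketch, assuming Python 3, the Hadoop web UI ports from Step 8, and Derby's port 1527 from the ConnectionURL, tries a plain socket connection to each one. It only shows that something is listening, not that the service is healthy; the helper names are hypothetical.

```python
import socket
from typing import Dict

# Ports used in this post: Hadoop 2.x web UIs and the Derby Network Server.
SERVICES = {
    "NameNode web UI": 50070,
    "DataNode web UI": 50075,
    "YARN ResourceManager web UI": 8088,
    "Derby Network Server": 1527,
}


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable: treat the service as not running.
        return False


def report(host: str = "localhost") -> Dict[str, bool]:
    """Check every service in SERVICES and map its name to True/False."""
    return {name: is_listening(host, port) for name, port in SERVICES.items()}
```

Running print(report()) on the same machine should show True for every entry once all the daemons are up.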

STEP - 10: Start Hive

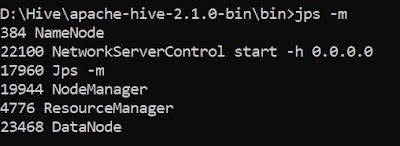

With the Derby server started and ready to accept connections, open a new command prompt with administrator privileges and change directory to “D:\Hive\apache-hive-2.1.0-bin\bin”:

[1] Type “jps -m” to check that NetworkServerControl (the Derby Network Server process) is running.

[2] Type “hive” to start the Hive command-line interface.

Congratulations, Hive is installed! 😊
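The jps -m check from [1] can be extended to the Hadoop daemons as well. A hedged sketch, assuming Python 3 and that jps (from the JDK) is on Path: it parses the "pid main-class arguments" lines that jps -m prints and lists which expected daemons are missing. The EXPECTED list and the helper names are assumptions based on Steps 8 and 9.

```python
import subprocess
from typing import Dict, List

# Main-class names expected after Steps 8 and 9 (an assumption; adjust as needed).
EXPECTED = ["NameNode", "DataNode", "ResourceManager", "NodeManager", "NetworkServerControl"]


def parse_jps(output: str) -> Dict[str, List[int]]:
    """Map each main-class name in `jps -m` output to the process ids running it."""
    processes = {}
    for line in output.splitlines():
        parts = line.split()
        # Useful lines look like: <pid> <MainClass> [arguments...]
        if len(parts) < 2 or not parts[0].isdigit():
            continue
        processes.setdefault(parts[1], []).append(int(parts[0]))
    return processes


def missing_daemons(processes: Dict[str, List[int]]) -> List[str]:
    """Return the expected daemons that do not appear in the parsed jps output."""
    return [name for name in EXPECTED if name not in processes]


def running_java_processes() -> Dict[str, List[int]]:
    """Run `jps -m` (needs the JDK bin folder on Path) and parse what it prints."""
    result = subprocess.run(["jps", "-m"], capture_output=True, text=True, check=True)
    return parse_jps(result.stdout)
```

If missing_daemons(running_java_processes()) returns an empty list, all five processes are up.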

STEP - 11: Some hands-on activities

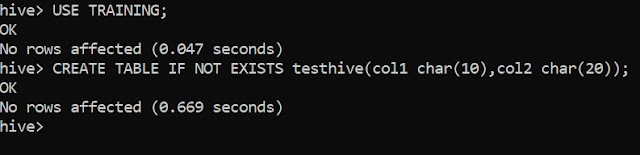

[1] Create a database in Hive:

CREATE DATABASE IF NOT EXISTS TRAINING;

[2] Show the databases:

SHOW DATABASES;

[3] Create a Hive table:

CREATE TABLE IF NOT EXISTS testhive(col1 char(10), col2 char(20));

[4] Describe a table in Hive:

DESCRIBE STUDENTS;

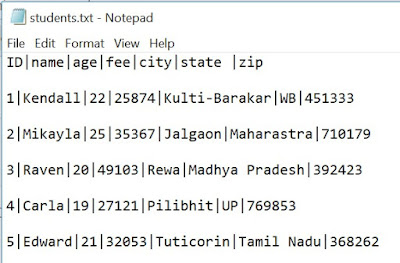

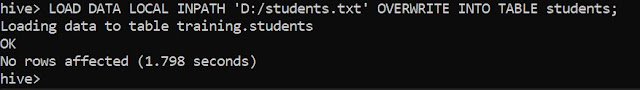

[5] Use the LOAD command to insert data into Hive tables:

Create a sample text file using the ‘|’ delimiter:
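The text does not spell out the layout of the sample file, so the rows below (id, name, branch) are hypothetical. As a sketch in Python 3, the helper writes one row per line with ‘|’ between fields, no header, and plain "\n" line endings. For Hive to split these lines on ‘|’, the target table has to be declared with ROW FORMAT DELIMITED FIELDS TERMINATED BY '|'; the testhive table from [3] uses Hive's default Ctrl-A (\001) field delimiter instead. The file can then be loaded with a statement of the general form LOAD DATA LOCAL INPATH '<path to file>' INTO TABLE <table>;

```python
from typing import Iterable, Sequence


def write_pipe_delimited(path: str, rows: Iterable[Sequence[object]]) -> None:
    """Write each row as one line with fields joined by '|' and no header line."""
    # newline="\n" writes plain '\n' line endings on every OS, including Windows.
    with open(path, "w", encoding="utf-8", newline="\n") as f:
        for row in rows:
            fields = [str(value) for value in row]
            # A '|' or newline inside a value would shift the columns Hive sees.
            if any("|" in v or "\n" in v for v in fields):
                raise ValueError(f"value contains a delimiter: {row!r}")
            f.write("|".join(fields) + "\n")
```

For example, write_pipe_delimited(r"D:\students.txt", [(1, "Asha", "CSE"), (2, "Ravi", "ECE")]) produces a file containing the two lines 1|Asha|CSE and 2|Ravi|ECE (path and data are illustrative).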

[6] Select data from a Hive table:

SELECT * FROM STUDENTS;

Stay in touch for more posts.
